Full-stack data scientist, passionate about driving innovation through end-to-end AI solutions.

Hi! I'm Ajay Chaudhary, a full-stack data scientist skilled in building data pipelines, training advanced machine learning models, and deploying them with tools such as Flask, FastAPI, and cloud platforms. With a strong foundation in Python, SQL, and deep learning, I focus on delivering end-to-end AI solutions that solve real problems.

A small selection of recent projects

Attendance System

An automated facial-recognition attendance system built with Python, OpenCV, and deep learning that detects, identifies, and logs faces in real time.
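
The logging step could look something like this minimal sketch. It assumes the recognition stage already produces a name for each detected face. `AttendanceLog`, the CSV layout, and the "once per person per day" rule are illustrative assumptions, not the system's actual code.

```python
import csv
import os
from datetime import datetime

FIELDS = ["name", "date", "time"]


class AttendanceLog:
    """Hypothetical helper: write each recognized person at most once per day."""

    def __init__(self, path):
        self.path = path
        self.seen = set()  # (name, "YYYY-MM-DD") pairs already on disk
        if os.path.exists(path):
            with open(path, newline="") as f:
                for row in csv.DictReader(f):
                    self.seen.add((row["name"], row["date"]))

    def mark(self, name, when=None):
        """Append a row for `name`; return False if already logged that day."""
        if when is None:
            when = datetime.now()
        key = (name, when.strftime("%Y-%m-%d"))
        if key in self.seen:
            return False
        # Write the header only when the file is new or empty.
        new_file = not os.path.exists(self.path) or os.path.getsize(self.path) == 0
        with open(self.path, "a", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=FIELDS)
            if new_file:
                writer.writeheader()
            writer.writerow({"name": name, "date": key[1],
                             "time": when.strftime("%H:%M:%S")})
        self.seen.add(key)
        return True
```

In a capture loop, `log.mark(name)` would be called for every recognized face. Repeated detections of the same person on the same day are ignored, which keeps the log clean even at video frame rates.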

Number Plate Detection

A real-time vehicle number plate detection system using YOLOv8 and Tesseract OCR that identifies and extracts license numbers from input video feeds.
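
Raw OCR output for a cropped plate can include stray spaces, punctuation, or lowercase letters. One plausible post-processing step normalizes each reading and then takes a majority vote across consecutive video frames. This sketch assumes plates use only Latin letters and digits. `clean_plate_text`, `vote_plate`, and the `min_len` cutoff are hypothetical. Real plate formats differ by region and may call for a stricter pattern.

```python
import re
from collections import Counter


def clean_plate_text(raw, min_len=4):
    """Uppercase and keep only A-Z / 0-9; return None if the result is too short."""
    text = re.sub(r"[^A-Z0-9]", "", raw.upper())
    return text if len(text) >= min_len else None


def vote_plate(readings):
    """Return the most frequent cleaned reading across frames, or None."""
    cleaned = [c for c in (clean_plate_text(r) for r in readings) if c]
    if not cleaned:
        return None
    return Counter(cleaned).most_common(1)[0][0]
```

Voting over several frames smooths out single-frame OCR mistakes, such as reading "B" as "8".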

Finance Chatbot

A conversational finance chatbot built on a Zephyr-7B model fine-tuned on the FiQA dataset that provides real-time answers and financial guidance.
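
Supervised fine-tuning of a chat model typically means converting each question-answer pair into the model's chat format. This minimal sketch assumes plain-string `question` and `answer` fields and uses a hypothetical system prompt. The tags mirror the template published on the Zephyr-7B model card. In practice, `tokenizer.apply_chat_template` from Hugging Face `transformers` is the safer way to generate them.

```python
def to_zephyr_prompt(question, answer=None,
                     system="You are a helpful financial assistant."):
    """Format one Q&A pair in Zephyr-style chat markup (hypothetical helper)."""
    prompt = (
        f"<|system|>\n{system}</s>\n"
        f"<|user|>\n{question}</s>\n"
        f"<|assistant|>\n"
    )
    # Training examples include the reference answer plus end-of-sequence tag;
    # at inference time the assistant turn is left open for the model to complete.
    if answer is not None:
        prompt += f"{answer}</s>"
    return prompt
```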

Brain Tumor Detection

A deep learning CNN model that detects brain tumors from MRI scans with high accuracy. The system produces consistent results and is designed for smooth integration.

Flight Price Prediction ML

A machine learning model that predicts flight ticket prices from features such as airline, duration, number of stops, and departure time. The system combines feature engineering with regression algorithms.
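
This small sketch illustrates the feature-engineering step. It assumes raw records with hypothetical `airline`, `duration` (e.g. "2h 50m"), `stops` (e.g. "non-stop", "1 stop"), and `dep_time` ("HH:MM") fields. The project's real column names and encodings may differ.

```python
import re


def duration_to_minutes(text):
    """Convert strings such as '2h 50m', '19h' or '45m' to total minutes."""
    hours = re.search(r"(\d+)\s*h", text)
    minutes = re.search(r"(\d+)\s*m", text)
    return ((int(hours.group(1)) * 60 if hours else 0)
            + (int(minutes.group(1)) if minutes else 0))


def stops_to_int(text):
    """Map 'non-stop' -> 0, '1 stop' -> 1, '2 stops' -> 2, and so on."""
    text = text.strip().lower()
    if text in ("non-stop", "nonstop"):
        return 0
    return int(text.split()[0])


def departure_features(hhmm):
    """Split 'HH:MM' into the hour and a coarse time-of-day bucket."""
    hour = int(hhmm.split(":")[0])
    bucket = ("night" if hour < 6 else "morning" if hour < 12
              else "afternoon" if hour < 18 else "evening")
    return hour, bucket


def make_features(row):
    """Turn one raw record (hypothetical schema) into model-ready features."""
    hour, bucket = departure_features(row["dep_time"])
    return {
        "airline": row["airline"],  # categorical: one-hot encode before regression
        "duration_min": duration_to_minutes(row["duration"]),
        "stops": stops_to_int(row["stops"]),
        "dep_hour": hour,
        "dep_bucket": bucket,       # categorical as well
    }
```

The numeric outputs can feed a regression model directly, while `airline` and `dep_bucket` would need encoding first.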

Pneumonia Detection

A pneumonia detection system using Detectron2 and Faster R-CNN that identifies infected regions in chest X-rays with precision and includes real-time visualization of predictions.

What I Work With (Toolbox)

- Python, Pandas, NumPy (Core Tools for Data Analysis & Manipulation): Handling real-world datasets requires precision, efficiency, and a deep understanding of data structures. Using Python with Pandas and NumPy, large volumes of raw, unstructured data were cleaned, transformed, and made analysis-ready. The resulting structured pipeline enabled rapid prototyping of ML models and surfaced key trends, while keeping the code readable and modular. These tools formed the backbone of every data project.

- Matplotlib, Seaborn, Plotly (Data Visualization & Insight Communication): Visual storytelling played a major role in making data science accessible to all stakeholders. Matplotlib and Seaborn turned complex analyses into clear, publication-quality visuals, while Plotly enabled interactive dashboards for real-time exploration. Each visualization aimed to deliver clarity as well as insight, making decision-making faster and better informed. Careful choices of color, labeling, and plot type kept the visuals effective throughout the data lifecycle.

- Scikit-learn, XGBoost, LightGBM (Machine Learning, Modeling & Evaluation): Advanced predictive modeling was driven by a well-rounded ML toolkit. Scikit-learn provided the foundation with pipelines and metrics, while XGBoost and LightGBM powered high-performance, scalable models suitable for real-world deployment. Feature selection, cross-validation, hyperparameter tuning, and interpretability tools such as SHAP were used to make models both accurate and trustworthy. The approach balanced data science theory with engineering best practices.

- Flask, FastAPI (Model Deployment & API Architecture): A standout aspect of full-stack data science is the seamless transition from training models to serving them live. Flask and FastAPI were used to wrap ML models in clean, production-ready REST APIs with proper routing, CORS handling, and documentation. This enabled real-time inference from both web apps and external systems. The backend code followed modular practices, making it easy to scale, maintain, and deploy in both development and production environments.

- Deep Learning & NLP Integration (User Interfaces for AI-Powered Applications): AI isn't just about models; it's about how users interact with them. Clean, responsive frontends were built with HTML, CSS, and JavaScript to let users interact seamlessly with deep learning and NLP models. These interfaces powered applications such as chatbots, summarizers, and Q&A systems, connecting users to language models in real time via REST APIs. The emphasis was on intuitive design, smooth UX, and accurate feedback from the backend models, turning complex AI functionality into user-friendly tools.
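
This sketch illustrates how a model can be wrapped in a REST endpoint. It uses only Python's standard-library `http.server`, so it runs without Flask or FastAPI installed. In Flask or FastAPI, the `handle_predict` logic would sit inside a route. `predict` is a hypothetical stand-in for a trained model, and the `/predict` path and `{"features": [...]}` payload are assumptions. CORS handling is omitted.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical stand-in for a trained model: returns the mean of the inputs."""
    return sum(features) / len(features)


def handle_predict(body):
    """Validate a JSON body like {"features": [...]} and return (status, payload)."""
    try:
        data = json.loads(body)
        features = [float(x) for x in data["features"]]
        if not features:
            raise ValueError("empty feature list")
    except (KeyError, TypeError, ValueError) as exc:  # JSONDecodeError is a ValueError
        return 400, {"error": str(exc)}
    return 200, {"prediction": predict(features)}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.rfile.read(length))
        out = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(out)))
        self.end_headers()
        self.wfile.write(out)


def serve(host="127.0.0.1", port=8000):
    """Run the API until interrupted (not started automatically)."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

After `serve()` starts, a frontend could POST `{"features": [1, 2, 3]}` to `http://127.0.0.1:8000/predict` and receive `{"prediction": 2.0}`. Keeping validation in a plain function makes it testable without running a server.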

My Education

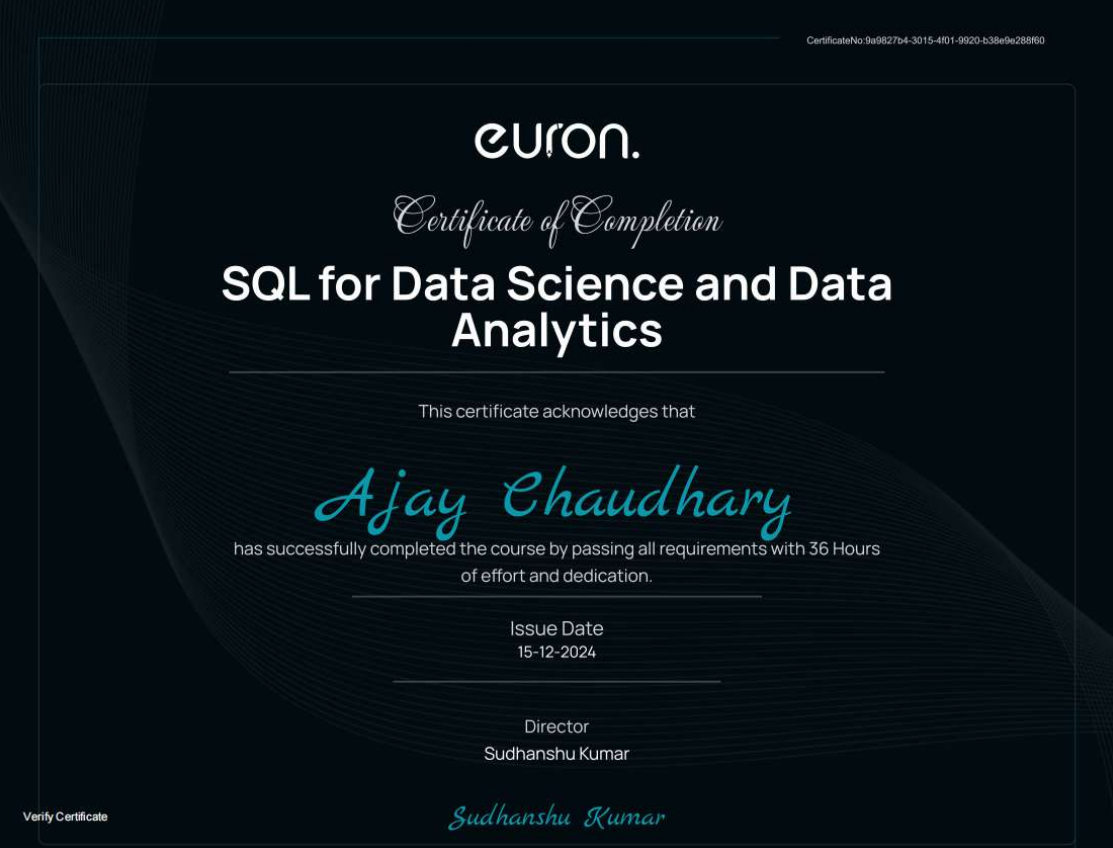

My Verified Certifications

Full Stack Data Science Masters

This certificate confirms successful completion of an intensive Full Stack Data Science program, covering Python, SQL, Machine Learning, Deep Learning, and deployment tools.

Python: Basic to Advanced

Certifies a strong understanding of Python programming concepts from fundamentals to advanced topics, including OOP, file handling, error handling, and modules.

SQL for Data Science

Verifies proficiency in SQL with a focus on using databases for data analysis, query optimization, joins, window functions, and applying SQL in real-world business scenarios.

Mastering PowerBI

Demonstrates the ability to use Microsoft Power BI to create data dashboards, reports, and visualizations, and connect data sources for business intelligence purposes.

Master OOP in Python

Acknowledges understanding of Object-Oriented Programming principles like classes, inheritance, encapsulation, and polymorphism in Python.

ML & DL: Basic to Advanced

Indicates knowledge of foundational Machine Learning and Deep Learning concepts, including supervised and unsupervised learning, neural networks, and model evaluation techniques.

My AI Workflow

Data & Understanding

- Problem understanding and defining goals

- Data collection and annotation (CSV, images, COCO JSON)

- Data cleaning, EDA, and visualization

- Class balancing and feature engineering

- Exploratory model training and baseline performance checks

- Hyperparameter tuning and architecture selection

- Preparing data loaders, augmentations, and split strategies
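
Class balancing and split strategies can be sketched with the standard library alone. This sketch assumes a flat list of class labels. `stratified_split` keeps each class's share roughly equal across the train, validation, and test sets. `balanced_class_weights` computes per-class loss weights with the n_samples / (n_classes * count) heuristic, which scikit-learn calls "balanced". Both function names and the default fractions are illustrative.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, val_frac=0.15, test_frac=0.15, seed=42):
    """Return sorted (train, val, test) index lists, split separately per class."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train, val, test = [], [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_frac)
        n_val = round(len(idxs) * val_frac)
        test += idxs[:n_test]
        val += idxs[n_test:n_test + n_val]
        train += idxs[n_test + n_val:]
    return sorted(train), sorted(val), sorted(test)


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}
```

For example, with 80 "normal" and 20 "pneumonia" labels (hypothetical values), the default split yields 70/15/15 samples with 3 pneumonia cases in the test set. The weights come out as 0.625 and 2.5.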

Modeling & Training

- Model selection: CNN, Faster R-CNN, LLMs (Zephyr-7B)

- Training and hyperparameter tuning

- Evaluation using accuracy, IoU, F1-score, confusion matrix

- Experiment tracking and version control using Git/DVC

- Cross-validation and early stopping techniques

- Model ensembling or post-processing if required

- Documentation of results and comparison with baselines
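
The IoU and F1 evaluation metrics each reduce to a few lines. This sketch assumes boxes are given as `(x1, y1, x2, y2)` corner coordinates and labels are binary 0/1. Libraries such as scikit-learn and Detectron2's evaluation utilities provide fuller, tested implementations.

```python
def iou(box_a, box_b):
    """Intersection over Union for two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)  # zero if boxes don't overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def binary_report(y_true, y_pred):
    """Confusion-matrix counts plus accuracy, precision, recall and F1 for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "accuracy": (tp + tn) / len(pairs),
            "precision": precision, "recall": recall, "f1": f1}
```

For example, `iou((0, 0, 2, 2), (1, 1, 3, 3))` gives 1/7: the boxes overlap in a 1x1 square, and their combined area is 4 + 4 - 1 = 7.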

Deployment & Monitoring

- Deploying via Flask, Streamlit, or Gradio

- Integration with Gemini API or Chatbot UI

- Dockerizing the app for environment consistency

- Hosting on Lightning AI, Render, or HuggingFace Spaces

- Monitoring performance & collecting feedback

- Retraining and pushing model updates

- Maintaining logs, dashboards, and alert systems
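
Monitoring and retraining triggers can start as simply as tracking accuracy over recent user feedback. In this sketch, `RollingMonitor`, the window size, the 0.8 threshold, and the minimum sample count are all hypothetical choices. Wiring the alert into logs, dashboards, or a retraining job is left to the surrounding system.

```python
from collections import deque


class RollingMonitor:
    """Track accuracy over the most recent `window` labelled predictions."""

    def __init__(self, window=100, threshold=0.8, min_samples=20):
        self.results = deque(maxlen=window)  # 1 = correct, 0 = wrong; old entries drop off
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, prediction, actual):
        """Store whether a prediction matched the feedback label."""
        self.results.append(int(prediction == actual))

    @property
    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def needs_attention(self):
        """True once enough feedback exists and rolling accuracy is below threshold."""
        return len(self.results) >= self.min_samples and self.accuracy < self.threshold
```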